Because this course has a gate for the exam, it seems like I won't be able to write it. It really bums me out, but I thought I'd take the time to write a blog about everything I've experienced in this course.

First year had my hopes up; being a game design course makes it sound pretty fun. It had its ups and downs. There were courses like Business of Gaming and the writing course, which had teachers who understood the students' perspective. Communication was key, and every student knew what was going to happen in the course and what they had to do. Then there was stuff like Algorithms, game physics, marketing, and entrepreneurship: classes that tried, but that a lot of students left feeling bitter about. Some of those courses didn't even come back, some good, some bad. Overall first year wasn't a letdown, but it wasn't crystal clear either. It became apparent that GDW was a separate course, even though it was worth 25%. The problem with GDW is that the connection only goes one way: your game is part of your classes, but your classes never teach you how to make a game. A lot of the course was learning on my own online, and I later learned that was a large theme of the course.

Fun little mention here: our marketing teacher called us all "suckers" for having Android phones, and said that consoles were dead and that there would be no next gen. She had no place teaching us about the industry.

I can't mention first year without a shout-out to Tassos and Emilian. These guys single-handedly got a large section of this course's students through. Our year was known as the "year that didn't get pointers" and it's still known as that today. I swear Ken Finney said it himself. Tassos and Emilian ran an "optional" tutorial outside of class in the game lab to help us first years get what we needed. Let me just say that the only people who got this far in the course showed up to those tutorials. That's a serious problem: the only people getting through your course are the ones who went outside of the normal school structure to get help from older years. Help that, may I remind you, Tassos and Emilian didn't have to give, so thank you guys.

Second year, my hopes were lower, but not low enough. I got introduced to Hogue and his "land mine" courses. Fail either Graphics or Animations and you might as well drop your year, because you have to start over. This was intimidating, and what didn't help was Hogue's attitude towards student failure. Personally I was a programmer at the time, and those courses didn't really affect me, but I saw them affect others a lot. How Hogue taught wasn't teaching, it was a challenge. He'd take basic concepts and theories and talk about them, and then throw the technical part at you. Sure, the TA went through coding, but that didn't really relate to anything that the Hogue part of the course was teaching. This pretty much applied to both Graphics and Animations.

There is no way I can let Game Design slip by this rant. That course had two options: to be good at a technical standard, or to be good on a personal level. Students wouldn't have minded a Game Design course of long write-ups, or one about just making games and enjoying your time. The course was neither. It was run by a TA who didn't know what she was doing, and the organization and planning of the course were so bad that it failed a lot of people and gave a lot of students who had 80% averages much less. Not to mention that the year before us had the same class with fewer of the problems. When there is a comparable example with the same name, it makes a course look bad. A lot of my year's students agreed, as class turnout got close to single digits late in the semester. Fun fact: I failed Game Design last year. This year I'm taking the second-year Game Design, taught by Pejman. The third years are taking the SAME course with Pejman. By failing Game Design I still got to take the next Game Design. Some true design flaws.

There were also the accounting and entrepreneurship courses. What's not to love? Professors contacting the class on Blackboard saying "Is anybody gonna show up today, i don't feel like going". Or accounting teachers doing the usual scam of having a new edition of the textbook each year, all written by them. These courses really added a bad smell to the rotting body that the course was showing itself to be.

There is one really easy way to clean up this course and it all revolves around the most important part of it, GDW. The disconnect of this class really leaves a bad taste in the mouth of the 1st, 2nd, and 3rd years I've talked to. They've said they really felt like the game was thrown on them, and this leaves the game to be made by the lead programmers. What this course needs is solid ground for GDW; so far it really doesn't fit. The 25% in each class should be something entire classes cover, with different sections for each member of a group based on their roles. Right now the GDW portions are thrown in as this mandatory thing the class needs, but they should be bigger than that. And if a class can't find a way of incorporating all the group members and their jobs, that class shouldn't be part of the curriculum. An example would be this Game Engines class. Looking at the homework, there's tons of work you could split between artists, programmers, and level designers. Even the producer wouldn't be left out if you added some sound design. If GDW was that connected, it wouldn't feel like the job it does now. GDW feels like a separate thing that's added to your issues in any course, and that's a problem.

I hope this course grows into something more tangible and workable. There are many students I know who deserved to make it this far and didn't, and that's a problem I hope they learn from and fix in the future.

Wednesday, December 3, 2014

Friday, November 28, 2014

Bloooooggggggggg

OMG I'VE BLOGGED SO MUCH. 10 game dev blogs, 10 philosophy blogs. Thank you Saad, 5 should be the standard. I have to say, thinking of what to write about is probably gonna take longer than writing this blog. Game engines is a weird hybrid of what I imagine two people debated about us learning. Someone wanted to teach us how to make our own engine, and someone wanted us to use a pre-built one. So they gave us a course where we use a pre-built engine, taught by the guy who made the engine, so we get to have some of the process, or at least the theory, of making it. Instead of learning how to make engines, we learn general infrastructure, which I would say is one step on the flight of stairs to making engines. Otherwise it really feels like Hogue's courses, but replace spritelib/OpenGL with 2Loc. Fingers crossed for the exam.

This semester is coming to a close and the game's key components are all in the bag. Next semester is going to be just simple game balancing and content addition. Well, and of course some networking features as well. That is where the fun is gonna be. Fiddling around with basic game design and mechanics is my favorite part of programming, personally. Honestly, I used to sit around in computers class and just practice making Space Invaders AI, and screw around with how my ship interacts.

Before that, though, I have to worry about some homework questions. If all turns out well, I'll complete the hard versions of the physics, Pong, and solar system questions. The physics question should be no trouble. Simply import 3 models: a car, a barrel and a bullet. Spawn both cars; the cannon's position is tied to the car, but its rotation is not. Then some simple ball launching physics, nothing more complicated than a quadratic formula based on cannon angle and power. From there, add a simple collision box around both players and that one's pretty much done.
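
For the ball launching part, here is a rough sketch of the math in Python (not the homework solution or engine code). It assumes flat ground, no drag, a launch height of zero, and that "power" is just the launch speed; `height_at` and `launch_range` are names made up for this example.

```python
import math

GRAVITY = 9.81  # m/s^2, assumed constant


def height_at(x, angle_deg, power):
    """Height of the ball at horizontal distance x (a quadratic in x)."""
    theta = math.radians(angle_deg)
    vx = power * math.cos(theta)  # horizontal speed
    vy = power * math.sin(theta)  # vertical speed at launch
    t = x / vx                    # time needed to reach distance x
    return vy * t - 0.5 * GRAVITY * t * t


def launch_range(angle_deg, power):
    """Distance at which the ball comes back down to launch height."""
    theta = math.radians(angle_deg)
    return power * power * math.sin(2.0 * theta) / GRAVITY
```

Checking whether a shot hits then comes down to comparing `height_at` at the other car's distance against the height of its collision box.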

Pong can be seen as a harder one because of the neon glow. I'll also have to change the ball color, but that's an easy one. The only way I know of doing the neon effect is to mark some section of the model that renders as glowing (on a more complicated model you'd use a glow map, like a normal map). From there you'd have a render pass just for blurring out the areas that are supposed to glow, and put that on top of or underneath your final render.

Finally, the solar system is just a lot of little work: having the text show up and selecting the models with your mouse. Nothing overly complicated.

1.5 Years left woooooooo.

Friday, November 7, 2014

The Province of Poutine

This week I want to talk about MIGS, like I imagine many people in the course are blogging about this week. I want to go over which events I plan on going to and why I'm going to them. Once you see them, it will be apparent that I'm avoiding some of the more technical events, especially ones involving industry marketing and accounting.

First thing on the list is Hand-Drawn Animation and Games, being presented by Eric Angelillo early on November 10th. Eric is an artist who has worked on a few projects and has a very awesome cartoony art style. His work can be seen here: http://www.ericangelillo.com/. I'm personally interested in improving my artistic skills because I've been focusing on programming for so long. Sprite work has been pixel art for a long time now, and games that feature vector art are a new craze that a few indie developers have jumped on. I hope things like Ubisoft's 2D art engine are mentioned and things like Cuphead are shown.

After that I've decided to go to Intro to Unreal Engine 4 by Zak Parrish. The description sums up why I want to go to this one: "This beginner-level session introduces the toolset and key features of Unreal Engine 4. Attendees will gain a solid understanding of what features of UE4 can best help their games stand out in an increasingly competitive environment. Covered topics include Blueprints, Materials, level construction, and more."- link. Zak is the technical lead writer for Epic Games and Unreal. He's pretty well known, and this class taught me that learning about an engine from its creator is pretty interesting. Similar to this, I also plan to go to the Unity 5 presentation. As much as I understand creating your own engine, I feel I do much better with things like Unity. I pick up coding and understanding the inner workings fairly fast when working with engines. I really want to see what the improvements are and compare the 2 industry beasts in size, strength and affordability. I actually plan on doing a blog about it sometime after.

Other than that, there are only a few I'd like to mention. The State of PlayStation conference is incredibly interesting to me. I've been a longtime customer of Sony, through the ups and downs, and I'd really like to know the direction they're going. They are also one of the only large publishers I wouldn't mind working for. They have recently taken a big interest in indie development; their subscription service, PlayStation Plus, has been a way for them to pick out the best of the best indie games and give them to all their users. Level Design and Procedural Generation by Tanya Short is in the same vein as hand-drawn animation: something that has been popularized by indie games and still has endless opportunities. Creating a randomly generated game that still creates complex movements is a true achievement of game design in my opinion. It helps build immersion, but at the same time helps give each player a unique personal experience. I'd love to see some concepts and theories on that topic.

Finally, I'd like to go to the Crash Course of The Psychology of Indie Development by Daniel Menard. He is the CEO of Double Stallion Games, the company Eric Angelillo works for right now. It seems like most people's plan coming out of this course is to either look everywhere for jobs or to grab friends they met in the course and start indie teams. So having some prep for dealing with true indie development would be nice. I am a big fan of psychology and its theories, so I won't be bored through this one.

I have to say, overall I'm pretty hyped for the whole event. Can't wait to see who I meet and what I learn.

Friday, October 17, 2014

Onwards and Upwards

The school year has been moving along and so has my group's GDW game. Since the scope of design is our lead programmer already rigged up a quick project in 2Loc that lets us load in maps from the Hammer map editor and move with FPS controls. So prototyping has been going great so far and the game is already taking shape.

He also got some pre-baked lighting going. Since Hammer exports to Maya, map creation is a breeze. Movement, including wall running (still being worked on), and bullets are working. As I said in my last blog, working on this game asset-wise is going to be a casual ride, but there is a lot of work to go into design. Last blog I also mentioned that the indie game TowerFall has infinitely looping levels, where one side leads to the opposite one, like the sides of Pac-Man. I mentioned that it's a lot harder to do in 3D than in 2D, so in today's blog I'm going to go over the basics of incorporating a system like that.

I'd like to take Portal as an example and explain what I'm basing the idea for these infinitely looping spaces on. Most people know the base idea of Portal, but to sum it up, the two circles are direct portals to each other: go in one, come out the other. The inner workings of the game had me puzzled for a while, but after some developer commentary I gained an understanding. Don't quote me on this, but I believe the commentary talks about 9 different versions of the world while playing Portal. The room you see above would be copied 9 times over, each copy with its own version of the player and its own version of the portals. Once a portal is shot, a version of it is put into every scene, and once the second one is shot the scenes combine. When you see a copy of your character in Portal, not through a portal but a portion of your character in two different sections of the world, say legs sticking out one side and body on the other, you are seeing a drone, not the true version of you. It's just another player model that's following your exact movements, but from that position. Portal obviously fakes its connectivity. I can't say the same for Portal 2, but I'm very confident that this is how it's done in the first game.

Now, with my group's game we don't want to incorporate an actual portal system. We were planning on having maps simply loop one or two sections into each other. Let's say I make a completely vertical map and leave the bottom and top open and symmetrical. From there I create 2 copies of that map, one on top of and one below the first one. Assuming I don't let you see into infinity, 2 extra maps should be enough to set up a loop. This is where controlling entities comes into play. If I load 3 maps and connect all 3, all I've done is increase the length. But if I load 3 entities of the player in the exact same place in the 3 maps, it's a different story. The player would start as the player character in the middle map. The other two versions of the player would take the exact same place in the other two maps. When the player goes close to one of the edges of the map, the copy in the opposite map will come close to the other edge of the original. This is how the loop works. Whenever the player tries to leave the map through one of its 2 exits, a copy of him is entering the map the original was in. By simply switching the camera from one copy of the player to the other, the player will never be able to reach the end. Also, since there are copies of the player in the other maps, a second player can be added without having to worry about whether the original player is in the right copy of the map. There will always be a version in the center.
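
To make the drone idea a bit more concrete, here is a minimal Python sketch of it, not our actual 2Loc code. It assumes a purely vertical loop, a made-up map height of 100 units, and moves that are always smaller than one map height; `LoopingPlayer` and `MAP_HEIGHT` are names invented for the example.

```python
MAP_HEIGHT = 100.0  # hypothetical height of one map copy


class LoopingPlayer:
    """One true player in the middle map plus two drones in the copies."""

    def __init__(self, y):
        self.y = y  # position inside the middle map, 0 <= y < MAP_HEIGHT

    def drone_positions(self):
        # The drones sit at the same spot in the copies above and below.
        return (self.y + MAP_HEIGHT, self.y - MAP_HEIGHT)

    def move(self, dy):
        self.y += dy
        # Leaving through the top or bottom: control (and the camera) is
        # handed to the drone that just entered the middle map, which is
        # the same as shifting the true player by one map height.
        if self.y >= MAP_HEIGHT:
            self.y -= MAP_HEIGHT
        elif self.y < 0:
            self.y += MAP_HEIGHT
```

Swapping which copy counts as the "true" player ends up being the same as wrapping the position, which is why the player can never reach the end.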

Overall, it's going to require some solid code to keep each entity in line across multiple copies of the scene. An entity manager that selects one version as the true one and keeps the others as drones would help the process. I'm pretty hyped to try this out, so wish me luck.

Friday, September 26, 2014

Year 3 - Game Dev, still going

Another year, another class that's trying to kill me. I welcome Game Engines with open arms; I've fought through the year of Hogue and I'm up for its challenge. So far so good. They've learned from past homework systems: with due dates, a student understands when material will be introduced to them, and there's a community of students all on the same page. So far the lectures have become large tutorial videos explaining some core mechanics of 2LOC and how we should use them. A new collection of pointers to play with, and a series of exception systems that I'd usually save for cleanup when writing code. Lectures consist of lengthy explanations of simple review and advanced theory, combined with a slide or two of lifesaving syntax. The syntax shown is so important because "Googling" isn't really an option with 2LOC. This isn't as much of a problem because the people teaching lectures and tutorials are easy to contact. I plan on completing my 4 easy questions before the due date. To sum up the course so far, it's going well.

Since we haven't gotten very deep into content yet (last tutorial was setting up a camera), I thought I'd talk about some concepts I've been going over for my GDW team. Recently our game has gotten a complete overhaul. We've completely ditched our last idea because we felt the scope was much too large. We have many skilled people on our team, but we felt the design side would prove to be too much for a few students in a year's time. We decided to go with our backup idea of making an FPS game with fighting-game-like combat. We thought that we could still put some work in as far as art and content goes, but the real reason we changed was because this game direction will let us make the game much more fun. What I'd like to talk about in this blog is why we think something that's competitive is going to be more fun, but also how making it properly is harder than you'd think.

My favorite genre of video games is fighting games. They are usually the hardest to make, taking the normal requirements of good art and programming, but also adding the challenge of getting multiple people to face off. There is a winner and a loser, but since this is a game you want both to have fun. You need a combination of balance and an understanding of skill. With a cooperative or single-player experience, you have a player or two going towards the same goal and for the most part experiencing the same things. Even with large multiplayer games where you have teams facing off against teams, the sheer variety of skills and choices allows some balance to come naturally. With 1 vs 1 fighting games the story is different. Both players may start the same and look at the game the same way, but the difference comes when you compare their individual styles. It can be as simple as people picking characters suited to them, or people just gravitating towards certain playstyles (offensive, defensive). These factors make balancing a game harder, because it's hard to take two different players, with different variables and skill levels, and make the game feel fair.

I can't count the number of times I've seen people lose interest in a game because someone beat them too hard, or they felt cheated in defeat. Recently competitive games have found a way around this factor, and that has been simplification. Games like League of Legends, TowerFall, and Nidhogg: taking RTSs and simplifying them to 4 buttons per character, taking a shooting game and simplifying it to 2D, and taking a fighting game and simplifying it to two characters of equal strength. These games follow an easy to learn, difficult to master approach. It just works better with the general population. I personally like it more when I can choose my own fighter and he's his own original thing. That is because I think individualism in fighting games, allowing both a defensive player and an offensive player to thrive, lets a game have more depth.

I'd like to talk about one of the games I mentioned specifically, TowerFall. It's where the majority of our game idea comes from. It's a 2-4 player game that involves archers facing off against each other. Each archer starts with 3 arrows and they can jump, dash or shoot an arrow. Jumping is used for movement, but jumping on opponents' heads is also a way to defeat them. Dashing makes you invincible for a short period of time, moves you fast and allows you to catch arrows, but is limited. Finally, you can shoot arrows to kill opponents, and you can pick them back up by walking over them after they've been shot. It's a pretty simple game, but we're pushing it to the 3rd dimension and making it an FPS. I'd like to go over the things we're going to face in this blog.

Guns with limited ammo that must be picked back up, a movement system, and a parry method. Now, FPS creates some problems real quick. First off, multiplayer in an FPS is usually on different screens. This means we are going to have to look into the online functionality of 2LOC. This is a fast-paced game, and if packets can't be sent from computer to computer fast enough we are going to have to limit it to split screen or use "dirty" methods like prediction based on player gameplay. Personally I think that the systems some FPS games use are absolutely terrible. The idea is that if the host isn't getting the packets in time, the game fills in the missing information with assumptions based on what was last known. That's why in some games, when you lose connection, you see players continue to walk into walls. I personally hate this method, and when similar methods are found in fighting games it's considered blasphemy by fan bases. There are also a lot of gameplay problems that come from just porting one idea to the other. For one, with limited ammo in an FPS, collecting ammo is completely different from something like TowerFall. TowerFall's maps loop, like Pac-Man, so technically nobody is ever actually above or below somebody else. Connecting segments like this are much harder to create in a full 3D game. Without these connecting maps you encounter a problem with limited ammo. All of a sudden jumping is unbalanced: if somebody shoots at you while you're in the air, their bullet hits the ceiling, traveling way farther away than if they had shot at the ground. It's also harder to get to this bullet now that the maps don't loop. Also, aiming is harder in 3 dimensions than in 2. The transition to 3D is going to cause a lot of reimagining of this idea, and I know my group and I can pull it off.
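
For anyone curious what that kind of prediction looks like, here is a tiny Python sketch of the simplest version (often called dead reckoning). It is only an illustration, not how any particular game or 2LOC does it; `predict_position` is a made-up name, and it assumes the last packet carried a position and a velocity.

```python
def predict_position(last_pos, last_vel, seconds_since_update):
    """Guess where a remote player is from the last packet received."""
    return tuple(
        p + v * seconds_since_update
        for p, v in zip(last_pos, last_vel)
    )
```

Nothing in there knows about walls, so a player who was running when the packets stopped just keeps running in a straight line, which is exactly the walking-into-walls behaviour.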

Monday, April 21, 2014

Exam Review

So last semester I wasn't too ready for the final exam. I underestimated it and got hurt because of it. This time around I've been getting ready to the point where I've started looking ahead to new engines and shaders in areas that weren't totally covered in class.

Let's not have a repeat of this.

I won't be covering the most basic stuff. I know the graphics pipeline and how meshes are made; that's covered in my midterm review, as well as normal/displacement mapping and toon shading.

A quick note on the 5 steps to HDR bloom: render to an FBO, tone map the FBO, Gaussian blur it (a convolution kernel, usually split into a horizontal and a vertical pass), and then render the blurred version with the original FBO.
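
Here is a rough CPU-side sketch of those steps in Python, on a single row of brightness values instead of a real FBO. The Reinhard tone map, the bright-pass threshold of 0.5 and the tiny (1, 2, 1) / 4 kernel are all my own assumptions for the example, not what we used in class.

```python
def tone_map(pixels):
    # Reinhard operator (an assumed choice): squeezes HDR values into [0, 1).
    return [p / (1.0 + p) for p in pixels]


def bright_pass(pixels, threshold=0.5):
    # Keep only the pixels bright enough to glow (assumed extra step).
    return [p if p > threshold else 0.0 for p in pixels]


def blur(pixels):
    # One pass of a 3-tap kernel (1, 2, 1) / 4 with clamped edges.
    n = len(pixels)
    out = []
    for i in range(n):
        left = pixels[max(i - 1, 0)]
        right = pixels[min(i + 1, n - 1)]
        out.append((left + 2.0 * pixels[i] + right) / 4.0)
    return out


def bloom(hdr_row):
    base = tone_map(hdr_row)
    glow = blur(bright_pass(base))
    # Add the blurred glow back on top of the original image.
    return [min(b + g, 1.0) for b, g in zip(base, glow)]
```

On a real image the same blur would run once along rows and once along columns.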

Motion blur is done by using an accumulation buffer, rendering in a bit of each of the last few frames along with the latest one.
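
As a quick sketch of what the accumulation does (plain Python on lists of pixel values, not actual OpenGL calls; `accumulate` and the `keep` weight of 0.75 are made up for the example):

```python
def accumulate(accum, frame, keep=0.75):
    """Blend the newest frame into the running accumulation buffer.

    `keep` is how much of the older frames survives each step.
    """
    return [keep * a + (1.0 - keep) * f for a, f in zip(accum, frame)]
```

Calling this once per frame leaves a fading trail of the previous frames in the buffer, and that trail is the blur.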

I've gone through tons of code on particle effects in shaders. Velocity-based shaders are also done by rendering frames ahead and then subtracting their values. The ins and outs of deferred shading and lighting: how it's done, and why we use it. A whole bunch of shadow mapping code examples. Thresholds through Photoshop tutorials, and overall just playing around with new engines and their shaders is what I've been using my time on.

Unreal engine

Last blog I went over shaders in Unity. Today I want to take a look at the other U engine, Unreal, once again using the official tutorials. While writing shaders manually is possible in Unreal (though ShaderDevelopmentMode is disabled by default), I'm going to go over the material editor and lighting.

Here's a quick look at a simple version of the material editor being used. With a GUI more common in hardware development, the properties of a material are set up this way in Unreal. On the left we have a big box with a lot of inputs. That's the material, and its name can be seen on top. The inputs cover a large portion of data and maps. Texture, specular and normal maps have been applied simply by loading in the texture and connecting it to an open slot on the material. There's also use of SpeedTree, a program used to quickly make randomly generated trees. In this case it's connected to World Position Offset, which lets SpeedTree change the world-space position of the vertices.

Honestly, at first glance this system looked great: easy to read and understand, and it covers a lot of the basics for shading materials. Once I figured out Material Functions it was pretty great. Material Functions are small material shaders that you can create to make an even simpler experience when using the material editor.

The example here shows a screen blend function, taking two layers and blending them together. Now you can collapse all these windows into a single window, from the inputs to the output. This allows anybody to use your effect/shader with ease. The material editor is an awesome little feature that makes creating effects super easy. It can even use premade math functions, including things like Lerp and some you see in .glsl files, like Clamp.
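
For reference, the math behind a screen blend is tiny. This is a plain Python sketch of the standard formula on single channel values in the 0 to 1 range, not Unreal's node graph; `screen_blend` is a name made up for the example.

```python
def screen_blend(base, blend):
    """Screen blend: invert both layers, multiply, then invert again."""
    return 1.0 - (1.0 - base) * (1.0 - blend)
```

The result is never darker than either input, which is why screen gets used for glows and highlights.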

There are 4 kinds of preset dynamic lights in Unreal: Directional, Point, Spot, and Sky lights. The reason I did this blog on the material editor and lights is that lights have light functions that can be edited just like materials.

I didn't mention that Unity has a similar approach to materials, but from what I've seen Unreal's is cleaner and simpler, and it's good for implementing the simple shaders that I'd use any day. Unity still has its uses, though, making it easier to code shaders by yourself.

Sunday, April 20, 2014

A look at Unity

I've decided to look into the shader options of Unity since it's already on our school laptops and I wanted to mess around with a fancy engine. Everything I'm looking at today I got off of Unity's help area/website. First thing I've got to say: there are more than 80 built-in shaders, and ShaderLab as a way to create more of your own. ShaderLab lets you add surface, vertex, fragment, and fixed function shaders, while the preset shaders revolve around vertex-lit, diffuse, specular, bumped diffuse, and bumped specular. From there the built-in shaders split into 5 families: basic, transparent, transparent cutout, self-illuminated, and reflective.

The first shader I'm going over is Parallax Bumped Specular. It sounds complicated, but it's just normal mapping with better depth. It adds a height map taken from the normal map on the object and creates greater depth on rendered objects. It makes these stones look like they have really deep crevices. The height map comes from the alpha channel of the normal map, where black is no depth and white is max depth. The specular portion is based on the Blinn-Phong lighting model. The reflective, highlighted part on the left is a simulated blurred reflection of the light. The Shininess slider affects the strength of the reflection. It's a more complicated version of what we've done in tutorials, but still not that complex.
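
Since Blinn-Phong came up, here is a small Python sketch of just the specular term, written on the CPU for clarity rather than as a Unity shader. The function names are made up, and `shininess` here is the raw exponent, which is an assumption and may not map one-to-one to Unity's slider.

```python
import math


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def blinn_phong_specular(normal, to_light, to_viewer, shininess):
    """Specular strength from the half vector between light and viewer."""
    n = normalize(normal)
    l = normalize(to_light)
    v = normalize(to_viewer)
    # Half vector; this breaks if light and viewer point exactly opposite.
    h = normalize(tuple(a + b for a, b in zip(l, v)))
    n_dot_h = max(sum(a * b for a, b in zip(n, h)), 0.0)
    return n_dot_h ** shininess
```

A bigger exponent gives a smaller, tighter highlight, and a smaller one spreads it out into that blurred reflection look.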

The farther you get in the list, the cooler stuff gets. First, on the left, we have a transparent version of our last shader. The transparency values of the object are taken from the alpha channel of the main texture, so it works just the same as transparency for sprites. Next, the middle picture is self-illumination, a cool, simple shader. You add a texture to be the illumination map, based on the alpha values, black being no light and white being max light. The white sections light up no matter how much light is hitting them, so it creates a glow-in-the-dark effect. Finally, reflection on the right. It's a simple reflection, and it requires a cubemap to actually show a reflection. Like before with illumination, the strength of the shader is based on the alpha channel of a texture, and once again black means no reflection and white means full reflection.

Another shading method that can be used in Unity is the surface shader. The surface shader interacts well with Unity's lighting pipeline, so its primary use is for shaders that deal with lights. It doesn't have its own language like GLFX, but it can be used for some interesting effects.

Here's an example of one surface shader. This effect is created by moving vertices along their normals. The surface shader is able to do this because data from the vertex shader can be passed on to the surface shader, which goes pixel by pixel. It can be summed up simply with this line:

v.vertex.xyz += v.normal * _Amount;

where "_Amount" is a multiplier between -1 and 1 that can be attached to a slider in Unity.

I'd like to go through a few more shaders and some custom stuff made by me in Unity, but I also want to check out other engines' shader options, like the Unreal engine.

Saturday, April 19, 2014

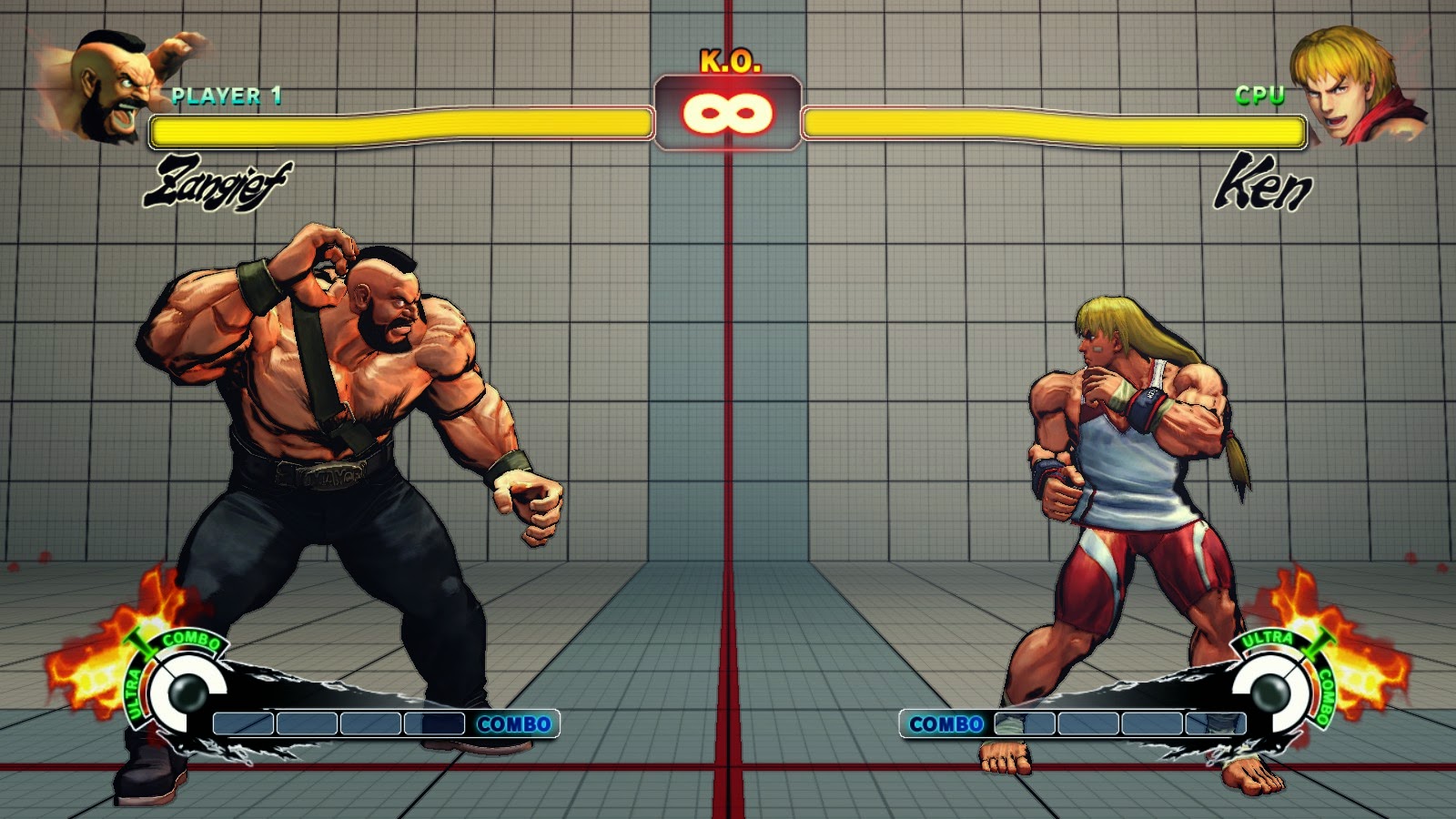

Street fighter 4

Today I want to go over the lighting effects in Super Street Fighter 4. I noticed in the options menu that the game has 3 extra effects: Ink, Watercolour, and Posterize. I'll be going over all three because they actually create some cool effects, and Street Fighter's art style has been talked about since its release. The first style is Ink; it's the first and most used style. A lot of the concept art for Street Fighter 4 used heavy ink lines, with an example under this text. The way the effect is done is pretty simple; it's the same way we did border detection for toon shading. The screenshots of toon shading in my game that I showed before used the same visual effect. By taking the camera information and the normals, and working out which areas are seen by the camera or light, the edge values are just the dot product of the view (or light) direction and the normals. The parts closest to the edge are around zero. Making a range around zero will highlight these edges. Here's the process reversed, to see only the edges and black out everything else.
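
The edge test itself fits in a couple of lines. This is a Python sketch of the idea, not the game's actual shader; it assumes both vectors are already unit length, and `is_edge` and the `width` of 0.2 are made up for the example.

```python
def is_edge(normal, to_camera, width=0.2):
    """True where the surface is nearly side-on to the camera."""
    facing = sum(n * c for n, c in zip(normal, to_camera))
    # A dot product near zero means the normal is almost perpendicular
    # to the view direction, which is where the silhouette is.
    return abs(facing) < width
```

Making `width` bigger thickens the outline, and swapping `to_camera` for the light direction moves the band to where the light grazes the surface.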

The next effect is Watercolour. Disappointingly, it's the same effect, but instead of changing the edges to black it changes them to a maroon-like colour. It's lighter than the black and it does look fairly different, but it kind of fails to really express a watercolour-like visual. I'd like to point out that when a character uses a powerful move, the shader goes back to the old ink look on the characters, so the border is used twice as much and the black outline becomes even heavier.

Finally, the Posterize effect. Here's an example of what it's supposed to look like: the colours of an image are sorted into a handful of thresholds, which turns a multicoloured image into one with a little under a dozen colours.
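For reference, a "real" posterize is only a few lines. This is a minimal sketch assuming 8-bit RGB pixels; the function names and the `levels` parameter are my own, not anything from the game. Each channel gets snapped to the nearest of a few evenly spaced steps.

```python
def posterize_channel(value, levels=4):
    """Snap a 0-255 channel value to the nearest of `levels` evenly spaced steps."""
    step = 255 / (levels - 1)
    return int(round(round(value / step) * step))

def posterize(pixel, levels=4):
    """Posterize one (r, g, b) pixel, channel by channel."""
    return tuple(posterize_channel(c, levels) for c in pixel)
```

With `levels=2` every channel is either 0 or 255, so the whole image can only contain 2 x 2 x 2 = 8 colours, which lines up with the "little under a dozen" look.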

Now this is the Street Fighter version. Once again it's the same effect, but with a little difference. Street Fighter already uses very little colour differentiation and already looks like a toon-like image, so the Posterize effect here changes the normal border effect's colour and range. Notice all the highlighted areas are under the character now. This can be done simply by changing the range of the border select, or by basing the border select off the light instead of the camera.

The effects ended up being much lazier than I expected. They could have gone all the way with their effects and actually made real posterize and watercolour effects.

Sunday, April 6, 2014

3D lighting in a 2D scene

An update on the game's main look so far. I decided to take out the toon edge bolding but kept the lighting technique. As for the hitbox detection from the last blog, it was pretty easy to do, but since our animator has had problems getting animations done, I needed to drop it, along with attacking, from our game. All I could really show would be a screenshot with slightly red characters. It's much more visible in video, but I've long since gotten rid of it.

I also added a cool lighting effect. The farther you get, the darker it becomes. I have a rather large skybox background lerping from bottom to top and back again. I also have the ambient lighting lerping, so now all I have to add is a sun and a moon that slerp by and I have a nice day and night cycle. This caused a cool effect I wanted to look into. The bars of light slide around on and off screen. As you can see, the first screenshot has 4 colours, then it moves to 3, then another 3. For the most part these colours slide around; in the last screenshot you can see that the darkest part has moved to the far right corner.
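The lerping ambient light is simple enough to sketch out. This isn't my actual game code, just the idea in Python; the `NIGHT` and `DAY` colours and the 60 second period are placeholder values I picked for the example.

```python
def lerp(a, b, t):
    """Linear interpolation from a to b as t goes 0 -> 1."""
    return a + (b - a) * t

def lerp_colour(c0, c1, t):
    return tuple(lerp(x, y, t) for x, y in zip(c0, c1))

def ping_pong(time, period):
    """Triangle wave: 0 at the start, 1 at half the period, back to 0 at the end."""
    phase = (time % period) / period
    return 1.0 - abs(2.0 * phase - 1.0)

# Placeholder ambient colours for the two ends of the cycle.
NIGHT = (0.05, 0.05, 0.15)
DAY = (1.0, 0.95, 0.8)

def ambient_at(time, period=60.0):
    """Ambient colour for a day/night cycle that lasts `period` seconds."""
    return lerp_colour(NIGHT, DAY, ping_pong(time, period))
```

The same `ping_pong` value could drive the skybox offset, so the background and the ambient light stay in sync.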

Now I didn't really use any code to do this, other than of course simple diffuse toon shading. I realized that this effect is coming from the large plane in the background, which is so large that the spotlight doesn't hit it correctly. Lighting on a flat plane was kind of cool to me, because the idea of realistic 2D lighting came to mind and I decided to look it up.

just some examples

just some examples

Now the first one seems pretty easy. It's probably already baked into the sprite, and if it's not, it's simply an orb with a gradient edge that adds to the colour values around the blast. The other one blew my mind when I first saw it.

https://www.kickstarter.com/projects/finnmorgan/sprite-lamp-dynamic-lighting-for-2d-art is a Kickstarter for a lighting engine for 2D games. It can generate normal maps for sprites, which can then use simple lights to create effects like the one shown. The hard part is being able to take in any sprite and create normal maps that work as well as this one, but the concept of how those normal maps would work on a sprite like this is pretty easy.

Of course this is all assumption, but from what I can see it's kind of simple. The program creates 4 normal maps, one version of the sprite lit from each side: top, bottom, left, and right. From there, 2D lighting on a 2D sprite could just be chosen by separating the x and y positions. When the light moves from left to right, it just has to trade normal map dominance. That's why when the light is far left it looks like the left-lit normal map, and when it's far right it looks like the right-lit one shown here.

The sprite's lighting is pretty much transforming from left to right based on the x position. If you do the same for the y and combine the two, lighting in 2D should work fine. In the GIF the light moves behind the sprite. Of course it's not actually rendering how the light hits the sprite; it's probably something as simple as a multiplier based on the z position. The farther the light is from the base z, the dimmer all the lights are. When the light comes around the left side, it slowly reveals the left-lit normal map. It kind of acts as an ambient light, even though it creates the effect of 3D lighting.
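Here's my guess written out as a tiny Python sketch for a single pixel. To be clear, this is only the assumption described above, not how Sprite Lamp actually works, and all the names (`blend_weights`, `lit_pixel`, `falloff`) are invented for the example. The light position is given relative to the sprite's centre, with x and y running from -1 to 1.

```python
def clamp01(x):
    return max(0.0, min(1.0, x))

def blend_weights(light_x, light_y):
    """How much each directional version counts, from the light's x/y offset.

    -1 means far left (or bottom), +1 means far right (or top).
    """
    left = clamp01(-light_x)
    right = clamp01(light_x)
    bottom = clamp01(-light_y)
    top = clamp01(light_y)
    return left, right, top, bottom

def lit_pixel(samples, light_x, light_y, light_z, base_z=0.0, falloff=1.0):
    """Blend one pixel's brightness from four pre-lit versions of the sprite.

    samples holds that pixel's brightness under 'left', 'right', 'top' and
    'bottom' lighting. The z distance only dims the result, as guessed above.
    """
    left, right, top, bottom = blend_weights(light_x, light_y)
    total = left + right + top + bottom
    if total == 0.0:
        # Light sits dead centre: fall back to an even mix of all four.
        blended = sum(samples[k] for k in ("left", "right", "top", "bottom")) / 4.0
    else:
        blended = (samples["left"] * left + samples["right"] * right
                   + samples["top"] * top + samples["bottom"] * bottom) / total
    dim = 1.0 / (1.0 + falloff * abs(light_z - base_z))
    return blended * dim
```

Sliding `light_x` from -1 to 1 trades the left-lit value for the right-lit one, which is the "dominance" swap I was talking about.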

Overall it's a pretty cool lighting technique. I'm pretty sure I managed to figure out how the lighting works, but how it generates the normal, depth, and ambient occlusion maps is beyond me.

Unity also seems to incorporate some 2D lighting, but it seems to require normal maps beforehand. Overall it's an interesting topic and it can create some interesting effects.

Sunday, March 9, 2014

Unique shading situations

Well, for a quick update on the game:

Cel shading and some border outlining have taken the look of our game where I wanted it to go. I decided not to use Sobel edge filtering because I like the simple borderless details on the character, and only wanted the outline around the characters.

My next task in graphics is to make an effect for attacks, so that they are more visible and give the player good warning that attacks are coming at them. Two things came to mind, and they are both games with only one plane of movement. First, for the theory, is Metal Slug. It's an old 2D side-scrolling shooter, and since it has a lot going on, enemy bullets and grenades flash bright blue and yellow. Bullets flashing blue and yellow can be seen in this picture here.

There is going to be a lot to dodge in my game, so having a good notifier is important. Now this blog is for a graphics class, so just making a texture or a sprite with big colours isn't gonna be enough. That's where the second inspiration comes in.

NetherRealm Studios have made two fighting games so far, Mortal Kombat and Injustice: Gods Among Us. The games have a lot in common, but I found out they share a feature in training mode. In both games there's a setting to have a character's limb highlight in red when he attacks with it. There's an example here: http://www.youtube.com/watch?v=0h2a4RC5Xus . Skip to pretty much any time in the video to see Scorpion's hands turn red during punches and his legs turn red during kicks. It seems like the limbs that turn red are chosen beforehand for each move, but I thought of a better way to pull it off. Since we are already using hitboxes, I thought using them to choose what turns red would work. The basic idea is this.

Since my game is mostly on one plane, like Mortal Kombat, I have a few options for how to do this effect. Probably the easiest would be just like the image above explains, but using something like this as the base:

With some tinkering on world and normal locations I was able to highlight just the objects that were in the middle of the game. The background and wall are taken out while the rest is left. This also creates an Xbox avatar-like glow effect. So any hitboxes that will highlight stuff can be sent through this shader. Simply mixing (with the mix() function) this output with an output containing the locations of all the hitboxes and how they would appear on screen should keep the background black and turn any parts of the model within a hitbox red. This method also adds a bit of an extra effect: if a hitbox hits something, that also goes red. I plan on refining this shader for the next blog, along with some other effects.
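Roughly, the mixing step would look something like this. It's a Python stand-in for what would really be a fragment shader: `mix` here copies what GLSL's `mix(x, y, a)` does, while `in_hitbox`, `highlight`, and the 2D box format are just names and shapes I made up for the sketch.

```python
def mix(x, y, a):
    """Same blend as GLSL's mix(): x * (1 - a) + y * a, per component."""
    return tuple(xi * (1.0 - a) + yi * a for xi, yi in zip(x, y))

def in_hitbox(point, box):
    """box is (min_x, min_y, max_x, max_y) in screen space."""
    x, y = point
    min_x, min_y, max_x, max_y = box
    return min_x <= x <= max_x and min_y <= y <= max_y

BLACK = (0.0, 0.0, 0.0)
RED = (1.0, 0.0, 0.0)

def highlight(pixel_pos, on_model, hitboxes, strength=1.0):
    """Red where a model pixel sits inside any hitbox, black everywhere else.

    on_model says whether the first pass kept this pixel, i.e. it is not
    part of the background or wall.
    """
    inside = any(in_hitbox(pixel_pos, box) for box in hitboxes)
    mask = strength if (on_model and inside) else 0.0
    return mix(BLACK, RED, mask)
```

Lowering `strength` would give a softer red tint instead of a solid colour, which might be easier on the eyes when a lot of attacks overlap.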

Sunday, February 23, 2014

Midterm Madness

Well, midterms are rearing their ugly heads, and I seem to be putting most of my studying towards Graphics. I'm overjoyed that the midterm is only worth 10% and is in the afternoon; morning midterms are hell.

I have been studying off a combination of old notes and the midterm slideshow, and by the end of the slideshow I was happy I had made some notes. For example, the slideshow only contains 1 slide on toon shading, but it was almost the main point of that class. I guess he's just not putting much of it on the midterm. I can sum up all the content so far as categories. I feel that writing it out will help with studying, and it should make a decent blog. The list is as follows: Basics of Computer Graphics, Textures, Basics of Shaders, Shader Examples, Lights and Shadows.

Basics of Computer Graphics-

Vertex shader, triangle assembly, rasterization, and fragment shader all need to be known and understood. We learned them in Intro to Graphics, and we had questions on them on that midterm and exam.

How 3-dimensional assets are created seems like really simple stuff to put on a midterm review, but I guess there's gonna be something on why we use triangles over squares (squares take more processing power).

Textures-

UV mapping: mapping points on the texture to points on the 3D model. Types of textures: bump mapping, which gets normal information from the texture, and displacement mapping, which gets displacement information from the texture and actually moves the surface.

Basics of Shaders-

More specific things in the graphics pipeline: where the shaders, VBOs/VAOs, and textures happen.

Shader Examples-

Like I talked about earlier, toon shading is a good example. Some examples should be known, like the monocolour/colour shaders in Dan's tutorials, or edge tracing.

Lights and Shadows-

This one's big: the difference between ambient, diffuse, and specular. Toon shading removes the gradient of diffuse and specular by using a function that only has a set number of light levels, as in only a bright and a grey. Radiosity brightens areas, then blurs them to create a softer, more realistic lighting look. They didn't mention it in the slideshow, but we did talk about ambient occlusion, which asks how many objects are in the way of the light source and bases the lighting off that.
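As a study aid, here's how I'd write the three lighting terms out. It's the standard Phong-style sum in plain Python, not code from the class framework; the weights (`ambient`, `kd`, `ks`) and `shininess` are just example values.

```python
import math

def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def phong(normal, to_light, to_viewer, ambient=0.1, kd=0.7, ks=0.2, shininess=16):
    """Brightness at a point as ambient + diffuse + specular.

    to_light and to_viewer both point away from the surface.
    """
    n = normalize(normal)
    l = normalize(to_light)
    v = normalize(to_viewer)

    # Ambient: a constant, the same everywhere.
    # Diffuse: how directly the surface faces the light.
    diffuse = max(dot(n, l), 0.0)

    # Specular: how closely the mirrored light direction lines up with the viewer.
    # Reflection of the light direction about the normal: r = 2(n.l)n - l
    n_dot_l = dot(n, l)
    r = tuple(2.0 * n_dot_l * nc - lc for nc, lc in zip(n, l))
    specular = max(dot(r, v), 0.0) ** shininess if diffuse > 0.0 else 0.0

    return ambient + kd * diffuse + ks * specular
```

Ambient never changes, diffuse only cares about the light, and specular is the only term that moves when the camera does, which is an easy way to remember the difference.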

That's a quick summary of what I have gone over. So far this midterm seems like it's gonna be a lot more theory compared to Animations and Intro to Graphics. Wish me luck.

Sunday, February 2, 2014

Harder Than it Should Have Been

Second semester is under way, and a new class asks for blogs. These things are my kryptonite, and I don't even really have powers. For reasons I don't really feel like sharing with the world wide web, writing forms like this are ridiculously difficult for me, but I've got to give it a shot.

Intermediate Graphics: if Introduction to Computer Graphics and Computer Animations had a big brother, it would be this class. I'm going to sum up what I learned in week one from my perspective.

1. Most of the code in Introduction to Computer Graphics was a trick (SFML and immediate mode). You're going to have to drop it and remake your code with what we're using if you want a chance.

2. You are gonna have to stress out at least once a week over a non-code-related task. As in, blogs are mandatory, and they're worth a hell of a lot.

3. Homework questions are back, this time with a twist: they have to be in your framework and they're marked properly. Meaning the lead programmer better get it together or everybody goes down.

4. Mr. Hogue is really unimpressed with our midterm and exam scores, so they're worth less in this class.

Those four points have been haunting me for the last few weeks, but enough of that; on to actual course content.

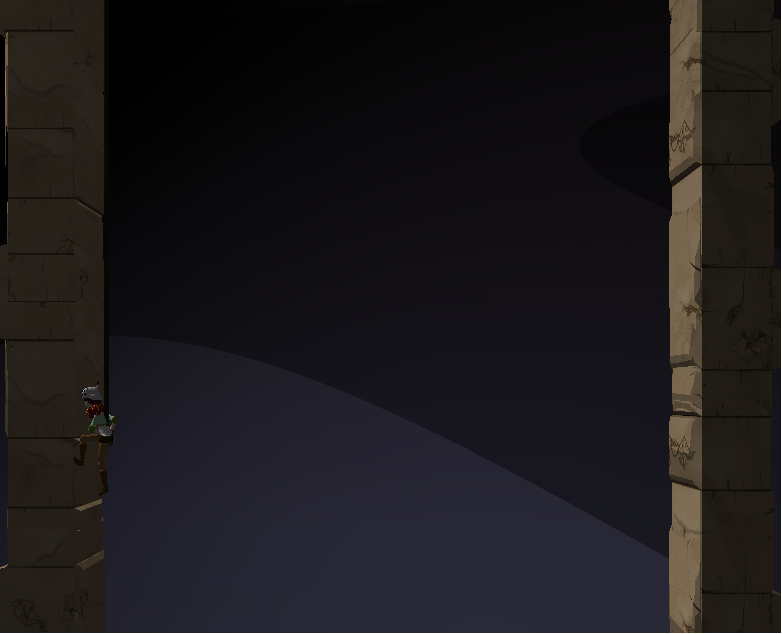

This is what our first semester prototype looks like.

So far it has a really simple art style that can be improved by incorporating pretty much anything that has been shown in class so far. To knock the big one off the list, cel shading would work wonders with our simple colors, especially on our protagonist. The best comparison for a game we would like to match would be The Legend of Zelda: The Wind Waker.

Cel shading itself seemed pretty simple. We covered it in week 3. Instead of using a gradient for lighting, with 100% being black and 0% being the original image, you just flatten it into bands, i.e. everything from 40% to 100% becomes grey. This ends up giving everything two colours (implementing more is easy), one for the original and a darker version for shading. As seen in this Wind Waker picture, Link's clothes are green, and dark green where the shading is.
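Written out, the two-tone version is about as short as it sounds. This is a sketch of the idea in Python rather than our actual shader; the 40% cut-off comes from the description above, while the function names and the 0.5 shadow factor are my own picks.

```python
def cel_shade(colour, diffuse, darkness_cutoff=0.4, shadow=0.5):
    """Two-tone cel shading for one pixel.

    diffuse is the usual N.L term clamped to 0..1 (1 = fully lit).
    Anything at or past the darkness cut-off gets the single shadow tone.
    """
    darkness = 1.0 - diffuse
    factor = shadow if darkness >= darkness_cutoff else 1.0
    return tuple(c * factor for c in colour)

def cel_band(diffuse, bands=3):
    """Multi-band version: snap diffuse to one of `bands` brightness steps."""
    level = min(int(diffuse * bands), bands - 1)
    return (level + 1) / bands
```

So a green tunic stays plain green where it's lit and drops to one flat darker green in shadow, with no gradient in between. Raising `bands` is the "implementing more is easy" part.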

What got me really interested in toon shading was creating black borders. Using normal values to know whether something is facing the camera had never crossed my mind. I'd love to see what playing with some of the ranges of that and the toon shading would look like, especially reversing the role of the border shading and only having color on the borders, creating a sort of highlighted edge effect that I think would be awesome for certain scenes in the game. To be more specific, it works like specular lighting: calculate the value of light seen by the viewer, and if the value is close to 0, make it black. Moving the range somewhere other than zero could create some weird border effects as well.

What I really want to do this semester is combine some of the shaders with some stuff we learned from Animations last semester. More specifically, creating scenes with a combination of lighting effects and particles.

The two main scenes I would like to get into the game are snow and rain. Rain is rarely seen in cel-shaded games, and usually it's just a simple particle effect and not much else. I would love to throw in some lightning by making a super bright ambient light for a second, and otherwise having some really distant dark lighting, with borders highlighted like I said earlier. For snow, I want to try using the toon border method but moving the values to somewhere in the middle to see if I can get a snow-covered effect.

Another tool from this class I would like to incorporate is bump mapping. It won't really be used by me as much, but it would be great for my artists. Most of our enemies are humanoid figures and they seem to have more and more triangles. Bump mapping will be nice for lowering that.

Next week's blog is going to be on weeks 4 and 5. I was sick in week 4 so I missed the classes, but I plan on doing some research online on the lecture topics to catch up.
